You Can’t Handle the Truth: How Confirmation Bias Distorts Your Opinions

The most difficult subjects can be explained to the most slow-witted man if he has not formed any idea of them already; but the simplest thing cannot be made clear to the most intelligent man if he is firmly persuaded that he knows already, without a shadow of doubt, what is laid before him.

– Leo Tolstoy

Your thoughts, opinions, beliefs, and worldviews are based on years and years of experience, reading, and rational, objective analysis.

Right?

Wrong.

Your thoughts, opinions, beliefs, and worldviews are based on years and years of paying attention to information that confirmed what you already believed while ignoring information that challenged your preconceived notions.

If there’s a single lesson that life teaches us, it’s that wishing doesn’t make it so.

– Lev Grossman

Like it or not, the truth is that all of us are susceptible to falling into a sneaky psychological trap called confirmation bias.

One of the many cognitive biases that afflict humans, confirmation bias refers to our tendency to search for and favor information that confirms our beliefs while simultaneously ignoring or devaluing information that contradicts our beliefs.

This phenomenon is also called confirmatory bias or myside bias.

It is a normal human tendency, and even experienced scientists and researchers are not immune.

Some things have to be believed to be seen. – Madeleine L’Engle

Here are two examples of confirmation bias in action, based on two commonly debated issues:

Climate change: Person A believes climate change is a serious issue, and they only search out and read stories about environmental conservation, climate change, and renewable energy. As a result, Person A continues to confirm and support their current beliefs.

Person B does not believe climate change is a serious issue, and they only search out and read stories that discuss how climate change is a myth, why scientists are incorrect, and how we are all being fooled. As a result, Person B continues to confirm and support their current beliefs.

Gun control: Person A is in support of gun control. They seek out news stories and opinion pieces that reaffirm the need for limitations on gun ownership. When they hear stories about shootings in the media, they interpret them in a way that supports their existing beliefs.

Person B is adamantly opposed to gun control. They seek out news sources that are aligned with their position, and when they come across news stories about shootings, they interpret them in a way that supports their current point of view. (source)

Right now, as you are reading this, other examples of confirmation bias are probably starting to creep into your mind.

After all, it is election season in the US, a time for which an apt nickname would be “Confirmation Bias Season.”

In politics, confirmation bias explains, for example, why people with right-wing views read and view right-wing media and why people with left-wing views read and view left wing media. In general, people both:

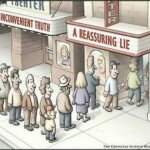

- Want to be exposed to information and opinions that confirm what they already believe.

- Have a desire to ignore, or not be exposed to, information or opinions that challenge what they already believe.

Even in cases where people do expose themselves to alternative points of view, it may be a form of confirmation bias; they want to confirm that the opposition is, indeed, wrong.

James Clear explains:

It is not natural for us to formulate a hypothesis and then test various ways to prove it false. Instead, it is far more likely that we will form one hypothesis, assume it is true, and only seek out and believe information that supports it. Most people don’t want new information, they want validating information.

Confirmation bias permeates political discussions. It is so pervasive that it largely goes unnoticed; we are used to it. It helps explain why political debates so often end in gridlock.

Facts do not cease to exist because they are ignored. – Aldous Huxley

Think about it: Have you noticed that people don’t want to hear anything negative about a candidate they’ve chosen to support? In many cases, it doesn’t matter what the facts are. Followers will resort to mental gymnastics – complete with cognitive flips and contortions – to justify continued support for their candidate.

In fact, when our deepest convictions are challenged by contradictory evidence, we may experience the “backfire effect.”

Coined by Brendan Nyhan and Jason Reifler, the term backfire effect describes how some individuals, when confronted with evidence that conflicts with their beliefs, come to hold their original position even more strongly.

The more ideological and the more emotion-based a belief is, the more likely it is that contrary evidence will be ineffective.

In the article The Backfire Effect, David McRaney explains that once something is added to your collection of beliefs, you will instinctively and unconsciously protect it from harm:

Just as confirmation bias shields you when you actively seek information, the backfire effect defends you when the information seeks you, when it blindsides you. Coming or going, you stick to your beliefs instead of questioning them.

This phenomenon is common during online debates, he says:

Most online battles follow a similar pattern, each side launching attacks and pulling evidence from deep inside the web to back up their positions until, out of frustration, one party resorts to an all-out ad hominem nuclear strike. If you are lucky, the comment thread will get derailed in time for you to keep your dignity, or a neighboring commenter will help initiate a text-based dogpile on your opponent.

What should be evident from the studies on the backfire effect is you can never win an argument online. When you start to pull out facts and figures, hyperlinks and quotes, you are actually making the opponent feel as though they are even more sure of their position than before you started the debate. As they match your fervor, the same thing happens in your skull. The backfire effect pushes both of you deeper into your original beliefs.

Confirmation bias has become even more prevalent because just about any belief can be “supported” with information found online.

Collecting evidence doesn’t always resolve confirmation bias: even when two individuals have the same information, the way they interpret it can be biased.

There are two different types of people in the world, those who want to know, and those who want to believe. – Friedrich Nietzsche

What happens when a person who holds a strong belief explores it and finds compelling evidence that it is incomplete or incorrect?

Either they discard the false belief or they ignore the evidence that disproves it. If the person refuses to change their belief even though it has been proven wrong, they are experiencing belief perseverance.

Belief perseverance is a psychological phenomenon that describes our tendency to maintain our original opinions in the face of overwhelming data that contradicts our beliefs. Everyone does it, but we are especially vulnerable when invalidated beliefs form a key part of how we narrate our lives, or when our beliefs define who we are.

Researchers have found that stereotypes, religious faiths, and even our self-concept are especially vulnerable to belief perseverance. A 2008 study in the Journal of Experimental Social Psychology found that people are more likely to continue believing incorrect information if it makes them look good (enhances self-image).

People face and dismiss contradictory evidence on a daily basis. For instance, if a man who believes that he is a good driver receives a ticket, he might reasonably feel that this single incident does not prove anything about his overall ability. If, however, a man who has caused three traffic accidents in a month believes that he is a good driver, it is safe to say that belief perseverance is at work.

Have you ever found yourself in a situation where you’re trying to change someone’s opinion about a social, scientific, or political issue, and you’re constantly met with failure because that person refuses to let go of their set views?

Belief perseverance can be so strong that even if you provide irrefutable evidence that their ideas are wrong, they will simply continue to believe what they always have.

Faith: not wanting to know what the truth is. – Friedrich Nietzsche

Confirmation bias drives willful ignorance, which is the state and practice of ignoring any sensory input that appears to contradict one’s inner model of reality.

It differs from the standard definition of “ignorance” – which means that one is unintentionally unaware of something. Willfully ignorant people are fully aware of facts, resources, and sources, but intentionally refuse to acknowledge them.

Willful ignorance is sometimes referred to as tactical stupidity.

RationalWiki elaborates:

Depending on the nature and strength of an individual’s pre-existing beliefs, willful ignorance can manifest itself in different ways. The practice can entail completely disregarding established facts, evidence and/or reasonable opinions if they fail to meet one’s expectations. Often excuses will be made, stating that the source is unreliable, that the experiment was flawed or the opinion is too biased. More often than not this is simple circular reasoning: “I cannot agree with that source because it is untrustworthy because it disagrees with me.”

In other slightly more extreme cases, willful ignorance can involve outright refusal to read, hear, or study, in any way, anything that does not conform to the person’s worldview. With regard to oneself, this can even extend to fake locked-in syndrome with complete unresponsiveness. Or with regard to others, to outright censorship of the material from others.

Motivated reasoning – our tendency to accept what we want to believe with much more ease and much less analysis than what we don’t want to believe – takes confirmation bias and belief perseverance to the next level. Motivated reasoning leads us to confirm what we already believe, while ignoring contrary data.

As NYU psychologist Gary Marcus once explained:

Whereas confirmation bias is an automatic tendency to notice data that fit with our beliefs, motivated reasoning is the complementary tendency to scrutinize ideas more carefully if we don’t like them than if we do.

But motivated reasoning also drives us to develop elaborate rationalizations to justify holding beliefs that logic and evidence have shown to be wrong. It responds defensively to contrary evidence, actively discrediting such evidence or its source without logical or evidentiary justification. It is driven by emotion.

Social scientists believe that we employ motivated reasoning to help us avoid cognitive dissonance, which is an uncomfortable state that is experienced when we hold two or more contradictory beliefs, ideas, or values at the same time, perform an action that is contradictory to one or more beliefs, ideas or values, or are confronted by new information that conflicts with our existing beliefs, ideas, or values.

In other words, self-delusion feels good, and truth often hurts.

That’s what motivates people to vehemently defend obvious falsehoods.

Sometimes people don’t want to hear the truth because they don’t want their illusions destroyed. – Friedrich Nietzsche

The effect is stronger for emotionally charged issues and for deeply entrenched beliefs. People also tend to interpret ambiguous evidence as supporting their existing position.

Biased searches, interpretation, and memories are invoked to explain attitude polarization (when a disagreement becomes more extreme even though the different parties are exposed to the same evidence), belief perseverance, the irrational primacy effect (a greater reliance on information encountered early on), and illusory correlation (when people falsely perceive an association between two events or situations).

In the article Psychology’s Treacherous Trio: Confirmation Bias, Cognitive Dissonance, and Motivated Reasoning, Sam McNerney ties all of these concepts together:

People don’t change their minds – just the opposite in fact. Brains are designed to filter the world so we don’t have to question it. While this helps us survive, it’s a subjective trap; by only seeing the world as we want to, our minds narrow and it becomes difficult to understand opposing opinions.

When we only look for what confirms our beliefs (confirmation bias), only side with what is most comfortable (cognitive dissonance) and don’t scrutinize contrary ideas (motivated reasoning) we impede social, economic, and academic progress.

Confirmation bias, belief perseverance, and motivated reasoning can have serious, life-altering consequences.

Poor decisions due to these biases have been found in political, legal, scientific, medical, and organizational contexts.

Juries can hear information about cases and form opinions before they’ve had a chance to objectively evaluate the facts. The risk of this occurring is especially great in highly publicized, emotionally charged cases.

Police officers and prosecutors who think they’ve “got the right guy” can ignore evidence to the contrary, leading to wrongful convictions and the incarceration of (and sometimes, the execution of) innocent people.

One prominent example is the case of Marvin Anderson, who was convicted of robbery, abduction, and rape in 1982.

Mark D. White, Ph.D., recounts Anderson’s ordeal in the article Tunnel Vision in the Criminal Justice System:

…despite very weak evidence supporting the prosecution’s case, questionable eyewitness identification, and four alibi witnesses that testified to seeing him in the same place (nowhere near the crime). Twenty years later, DNA evidence conclusively proved that Anderson was innocent, and pointed to the true offender, Otis Lincoln; much evidence available during Anderson’s trial also indicated Lincoln was the likely attacker, but this was never investigated after Anderson was chosen as the main suspect. Even after Lincoln confessed, the judge who presided over Anderson’s trial refused to credit Lincoln’s confession (finding it false), and Anderson served out his prison term and parole until DNA testing conclusively identified Lincoln as the attacker.

White goes on to explain how this happened:

Confirmation bias led the various actors in the criminal justice system (from the investigating officers and detectives to the prosecutors to the trial judge), once they had focused on Anderson as the key suspect, to exaggerate the relevance of evidence supporting his guilt, and to downplay contradictory evidence supporting his innocence (namely, that which pointed away from Anderson and toward Lincoln).

So once a suspect like Anderson becomes the focus of an investigation, and evidence is gathered (and interpreted) to confirm that focus, in hindsight that decision will be regarded as inevitable and correct. Also, an eyewitness may only vaguely remember who she saw at the crime scene, but after her identification of a suspect like Anderson is confirmed, she’ll later think she remembered him better than she actually did at the time. Finally, the longer and more frequently that the authorities reaffirm Anderson’s guilt, the more confident they become in their original judgment, to the extent that even presented with DNA evidence and a confession by another suspect, Anderson’s judge was reluctant to set him free, since he’d been regarded as guilty for so long.

In science, you move closer to the truth by seeking evidence to the contrary. Perhaps the same method should inform your opinions as well. – David McRaney

Last year, a study from the University of Iowa found that once people reach a conclusion, they aren’t likely to change their minds, even when new information shows their initial belief is likely wrong and clinging to that belief costs real money. The researchers found that equity analysts who issue written forecasts about stocks may be subject to confirmation bias, failing to let new data significantly revise their initial analyses.

Phobias and hypochondria have also been shown to involve confirmation bias for threatening information.

Confirmation bias, belief perseverance, and motivated reasoning can impact foreign relations, leading to policy-making mistakes, and ultimately, in the most tragic cases, the death and destruction caused by unjust wars.

There are two ways to be fooled. One is to believe what isn’t true; the other is to refuse to believe what is true. – Søren Kierkegaard

Two possible explanations for these biases are wishful thinking and the limited human capacity to process information.

Another explanation is that people show confirmation bias because they are weighing up the costs of being wrong, rather than investigating in a neutral, scientific way.

Now that we have identified these biases, how do we avoid letting them infiltrate our decision-making and belief systems?

The following techniques may help.

- Be open to new information and other perspectives. Don’t be afraid to test or revise your beliefs.

- Even if you consider yourself an expert on a topic, approach new information as a beginner would.

- Ask someone you trust to play devil’s advocate. Ask them to challenge your assumptions.

- Don’t let a limited amount of past experience (particularly one negative experience) carry too much weight. Be sure to envision the future, not just replay the past.

- Remind yourself that your intuition is lazy (designed to make predictions quickly, but not always accurately) and does not want to be challenged. Seek and fully evaluate other alternatives before making decisions.

- When you believe something strongly, but don’t have recent and compelling evidence to support that belief, look for more information.

- Check your ego. If you can’t stand to be wrong, you’re going to continue to fall victim to biases. Learn to value truth rather than the need to be right.

- Look for disagreement. If you’re right, disagreement will help highlight this; if you’re wrong, it will help you identify why.

- Ask insightful, open-ended questions. Direct them to people who are not afraid to be honest with you. Be quiet and listen to what they say.

- Examine conflicting data. Discuss it with people who disagree with you and evaluate the evidence they present.

- Consider all the viewpoints that you can find – not just the ones that support your current beliefs or ideas.

If one were to attempt to identify a single problematic aspect of human reasoning that deserves attention above all others, the confirmation bias would have to be among the candidates for consideration.

Many have written about this bias, and it appears to be sufficiently strong and pervasive that one is led to wonder whether the bias, by itself, might account for a significant fraction of the disputes, altercations, and misunderstandings that occur among individuals, groups, and nations.

– Raymond S. Nickerson